I am currently a Master’s student in Information and Communication Engineering at Northwestern Polytechnical University (NPU). I am a member of the Shaanxi Provincial Key Laboratory of Information Acquisition and Processing in the School of Electronics and Information, where I am supervised by Prof. Yifan Zhang and Prof. Shaohui Mei. My research focuses on computer vision, with specific interests in image super-resolution and image generation.

I earned my Bachelor’s degree in Telecommunication Engineering from the Faculty of Information Science and Engineering at Ocean University of China (OUC).

📖 Education

- 2023.09 - 2026.03 (expected), Northwestern Polytechnical University, Xi’an, Information and Communication Engineering.

- 2019.09 - 2023.06, Ocean University of China, Qingdao, Telecommunication Engineering.

🔥 News

- 2025.08: 🎉🎉 I presented my paper “HSCT: Hierarchical Self-Calibration Transformer for Hyperspectral Image Super-Resolution” as an Oral presentation at IGARSS 2025 in Brisbane, Australia.

- 2025.04: 🎉🎉 Our paper “HSCT: Hierarchical Self-Calibration Transformer for Hyperspectral Image Super-Resolution” was accepted as an Oral presentation at IGARSS 2025!

📝 Publications

📃 Papers

🛰️ Multi-Stage Grouped-Aggregation Transformer with Large-Window Feature Learning for Hyperspectral Image Super-Resolution

Jinliang Hou, Yifan Zhang, Shaohui Mei.

📄 Submitted to IEEE Transactions on Geoscience and Remote Sensing (TGRS) — Under Review

🔗 Manuscript Preview

- 📄 Paper Introduction

We propose a novel method for hyperspectral image super-resolution (HSR) based on a Multi-Stage Grouped-Aggregation Transformer with Large-Window Feature Learning. The method is designed to address the challenges of insufficient feature representation and inefficient modeling of high-dimensional spectral data by progressively extracting and reconstructing features across stages while effectively capturing global spatial dependencies.

- 🚀 Key Contributions

- Multi-Stage Feature Extraction and Reconstruction Framework

- Grouped-Aggregation Strategy for High-Dimensional Spectral Data

- Transformer Architecture Adapted for Large-Window Feature Learning

- ✅ Experimental Results

To be released.

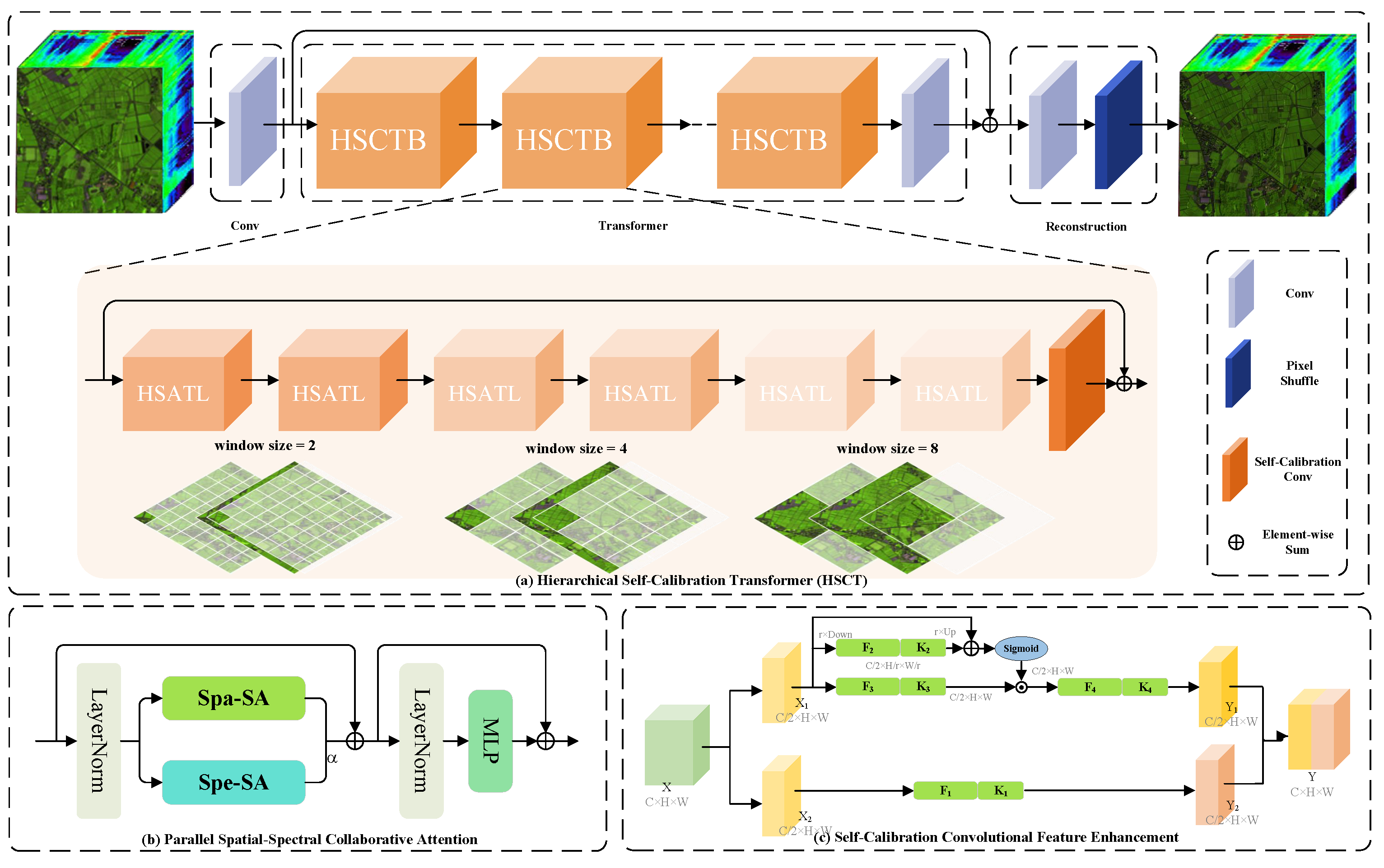

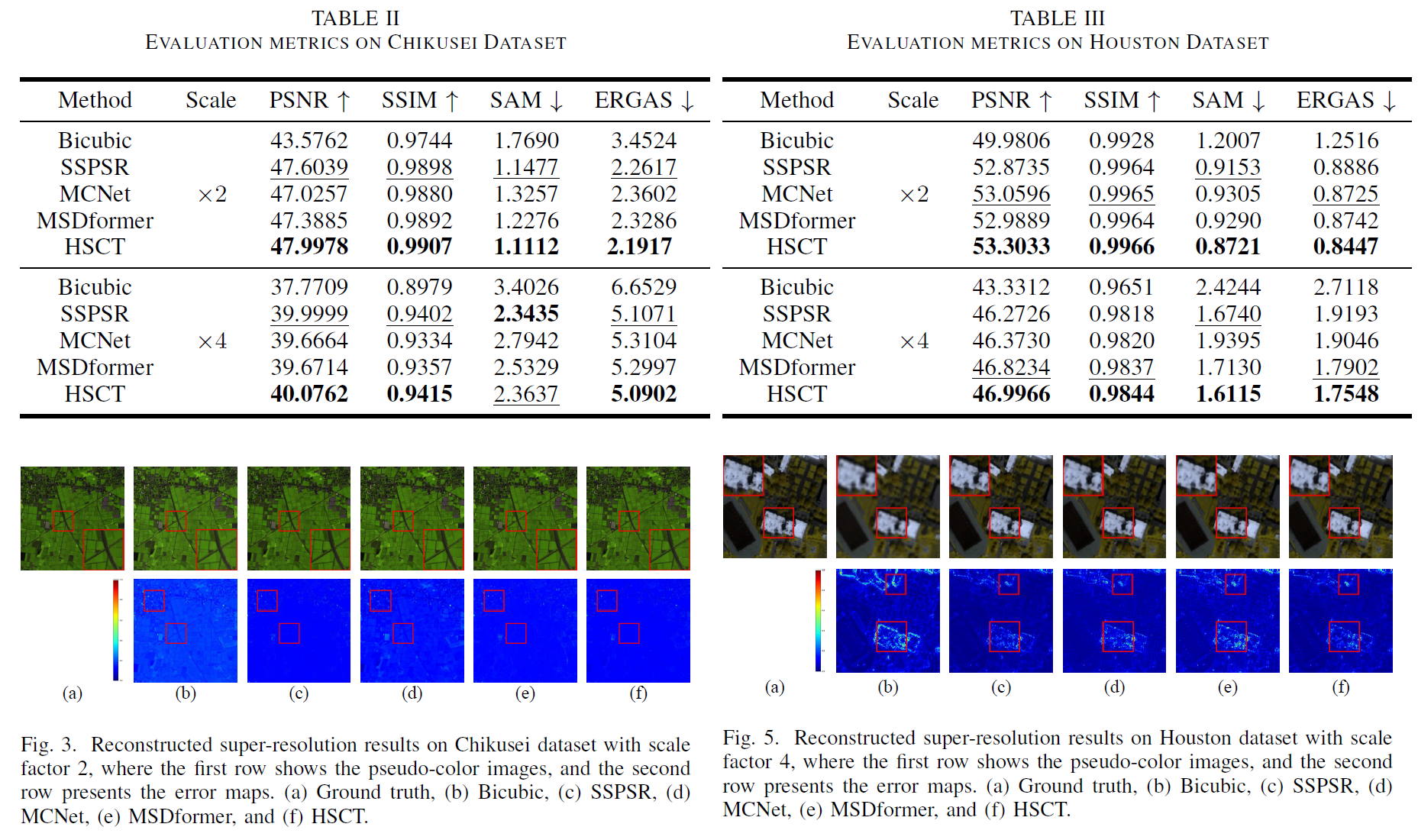
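The contributions above refer to Transformer attention computed over (large) windows. The manuscript's actual module design is not described here, so the sketch below is only a generic, Swin-style illustration of window-based self-attention under stated assumptions: `window_partition` and `window_self_attention` are hypothetical helpers, attention is single-head with no learned query/key/value projections, and H and W must be divisible by the window size.

```python
import numpy as np


def window_partition(x: np.ndarray, win: int) -> np.ndarray:
    """Split an (H, W, C) feature map into non-overlapping win x win windows.

    Returns shape (num_windows, win * win, C): one token sequence per window.
    """
    h, w, c = x.shape
    if h % win or w % win:
        raise ValueError("H and W must be divisible by the window size")
    x = x.reshape(h // win, win, w // win, win, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (n_rows, n_cols, win, win, C)
    return x.reshape(-1, win * win, c)


def window_self_attention(x: np.ndarray, win: int) -> np.ndarray:
    """Single-head self-attention restricted to each window (hypothetical, no projections)."""
    tokens = window_partition(x, win)  # (N, L, C)
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(x.shape[-1])  # (N, L, L)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability for softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)  # softmax over keys within the window
    return attn @ tokens  # (N, L, C)
```

Each token attends only to tokens in its own window, so a larger `win` widens the receptive field while the total cost grows with the window area. A real model would add learned projections, multiple heads, and a feed-forward block at each stage.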

🛰️ HSCT: Hierarchical Self-Calibration Transformer for Hyperspectral Image Super-Resolution

Jinliang Hou, Yifan Zhang, Yuanjie Zhi, Rugui Yao, Shaohui Mei.

🎤 Accepted as an Oral Presentation at IGARSS 2025

📄 View Paper

- 📄 Paper Introduction

We propose a novel method named Hierarchical Self-Calibration Transformer (HSCT) for hyperspectral image super-resolution (HSR). This work addresses the limitations of existing approaches in capturing multi-scale spatial structures and integrating spatial-spectral information effectively. HSCT is designed to enhance both spatial detail and spectral fidelity through hierarchical modeling and coordinated feature learning.

- 🚀 Key Contributions

- Hierarchical Variable-Window Self-Attention: A multi-stage Transformer framework with progressively varying window sizes captures spatial features from local to global scales, enabling flexible and effective multi-scale representation.

- Parallel Spatial-Spectral Collaborative Attention: A dual-branch attention mechanism models spatial and spectral dependencies in parallel, facilitating spatial-spectral feature fusion and reducing spectral distortion.

- Self-Calibration Convolutional Feature Enhancement: A lightweight self-calibrated convolution module is embedded to improve the stability and robustness of local features, strengthening fine-grained representation in shallow layers.

- ✅ Experimental Results

Extensive experiments on benchmark hyperspectral datasets (e.g., Chikusei, Houston) demonstrate the superiority of HSCT over state-of-the-art methods in terms of PSNR, SSIM, SAM, and ERGAS.
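PSNR and SAM, two of the metrics listed above, can be computed as in the sketch below. This is a common formulation, not necessarily the exact evaluation protocol of the HSCT paper: it assumes reference and reconstructed cubes shaped `(H, W, C)` with values scaled to `[0, data_range]`, averages PSNR over spectral bands, and reports SAM as the mean per-pixel spectral angle in degrees. SSIM and ERGAS are omitted for brevity.

```python
import numpy as np


def psnr(ref: np.ndarray, est: np.ndarray, data_range: float = 1.0) -> float:
    """Band-averaged PSNR (dB) for hyperspectral cubes shaped (H, W, C)."""
    mse = np.mean((ref - est) ** 2, axis=(0, 1))  # per-band MSE, shape (C,)
    mse = np.maximum(mse, 1e-12)  # guard against division by zero for identical bands
    return float(np.mean(10.0 * np.log10(data_range**2 / mse)))


def sam(ref: np.ndarray, est: np.ndarray, eps: float = 1e-12) -> float:
    """Mean spectral angle (degrees) between per-pixel spectra of two (H, W, C) cubes."""
    r = ref.reshape(-1, ref.shape[-1])
    e = est.reshape(-1, est.shape[-1])
    denom = np.maximum(np.linalg.norm(r, axis=1) * np.linalg.norm(e, axis=1), eps)
    cos = np.sum(r * e, axis=1) / denom
    angles = np.arccos(np.clip(cos, -1.0, 1.0))  # clip absorbs rounding just above 1
    return float(np.degrees(np.mean(angles)))
```

Because SAM depends only on the direction of each pixel's spectrum, a uniformly scaled reconstruction has near-zero SAM even when its PSNR is poor, which is why spatial and spectral metrics are usually reported together.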

💻 Internships

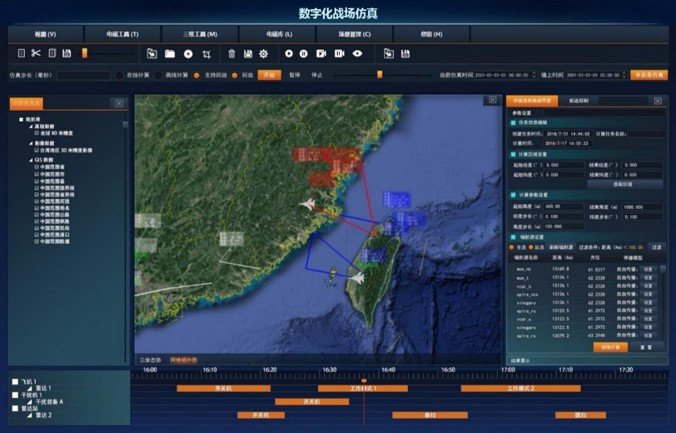

China Electronics Technology Group Corporation Information Science Academy, Beijing, China. (2025.06 - 2025.07)

The internship centered on the Large Model-Driven Intelligent Command Decision-Making and Adversarial Simulation System. This project applied large language models (LLMs) to several aspects of military operations, including LLM training, fine-tuning, and deployment; intelligence data analysis, knowledge graph construction, and retrieval-augmented generation (RAG) integration; situational awareness and target threat assessment; firepower planning scheme generation; and wargaming and simulation.

Large Language Model Applications for Fire Planning:

- Played a central role in the technical feasibility study of an LLM-based fire planning project, assessing the viability and challenges of key technical routes: commander-preference-based tactical recommendation, intelligent scheme matching and modification, and agent-based scheme generation.

- Conducted technology analysis and comparative studies, identifying potential opportunities and technical bottlenecks for applying large models to complex military decision-making scenarios. This work provided the strategic and technical justification that shaped the project’s subsequent research and development direction.

🔎 Projects

Research on Collaborative Multi-Modal Intelligent Recognition and Tracking Technology for XX

This project investigates intelligent target recognition and tracking in multi-platform, multi-modal collaborative scenarios, with the aim of enhancing the system’s intelligent perception and decision-making capabilities in complex environments. Using deep learning-based object detection and multi-object tracking algorithms, we efficiently fuse and process multi-source heterogeneous data while supporting stable operation on resource-constrained platforms. The work provides technical support for multi-platform collaborative operations and intelligent monitoring.

Finished Works:

- We integrate multi-platform and multi-modal data sources to construct a unified object detection and tracking processing framework, realizing information fusion and enhanced perception.

- To accommodate the resource constraints of different platforms, we implement and deploy mainstream detection algorithms such as RTMDet and Deformable DETR, balancing detection accuracy against computational efficiency.

- We implement advanced tracking algorithms like DeepSORT and ByteTrack to achieve stable target association and trajectory maintenance in complex scenarios.

- Algorithm optimization and deployment tests are conducted on various computing platforms to verify the system’s real-time performance, robustness, and collaborative perception capabilities across multiple scenarios.
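As an illustration only, the sketch below shows the core idea behind ByteTrack's two-stage association: high-confidence detections are matched to existing tracks first, and low-confidence detections are then used to recover tracks that remained unmatched. It is deliberately simplified and rests on several assumptions: boxes are `(x1, y1, x2, y2)`, track boxes are taken as given (the real tracker uses Kalman-filter predictions), matching is greedy rather than Hungarian, and the thresholds `high=0.6` and `thresh=0.3` are illustrative. DeepSORT's appearance-embedding cost is not modeled.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Sequence[Box], dets: Sequence[Box], thresh: float) -> List[Tuple[int, int]]:
    """Pair track/detection indices greedily by descending IoU (simplification of Hungarian matching)."""
    candidates = sorted(
        ((iou(tb, db), t, d) for t, tb in enumerate(tracks) for d, db in enumerate(dets)),
        reverse=True,
    )
    used_t, used_d, matches = set(), set(), []
    for score, t, d in candidates:
        if score < thresh:
            break  # all remaining candidates have even lower IoU
        if t in used_t or d in used_d:
            continue
        used_t.add(t)
        used_d.add(d)
        matches.append((t, d))
    return matches


def two_stage_associate(
    tracks: Sequence[Box], dets: Sequence[Box], scores: Sequence[float],
    high: float = 0.6, thresh: float = 0.3,
) -> List[Tuple[int, int]]:
    """ByteTrack-style: match high-score detections first, then low-score ones to leftover tracks."""
    high_idx = [i for i, s in enumerate(scores) if s >= high]
    low_idx = [i for i, s in enumerate(scores) if s < high]
    first = greedy_match(tracks, [dets[i] for i in high_idx], thresh)
    matches = [(t, high_idx[d]) for t, d in first]
    matched_tracks = {t for t, _ in first}
    left = [t for t in range(len(tracks)) if t not in matched_tracks]
    second = greedy_match([tracks[t] for t in left], [dets[i] for i in low_idx], thresh)
    matches += [(left[t], low_idx[d]) for t, d in second]
    return sorted(matches)
```

The second stage is what lets a partially occluded target, whose detection score drops below `high`, keep its existing track instead of being lost.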

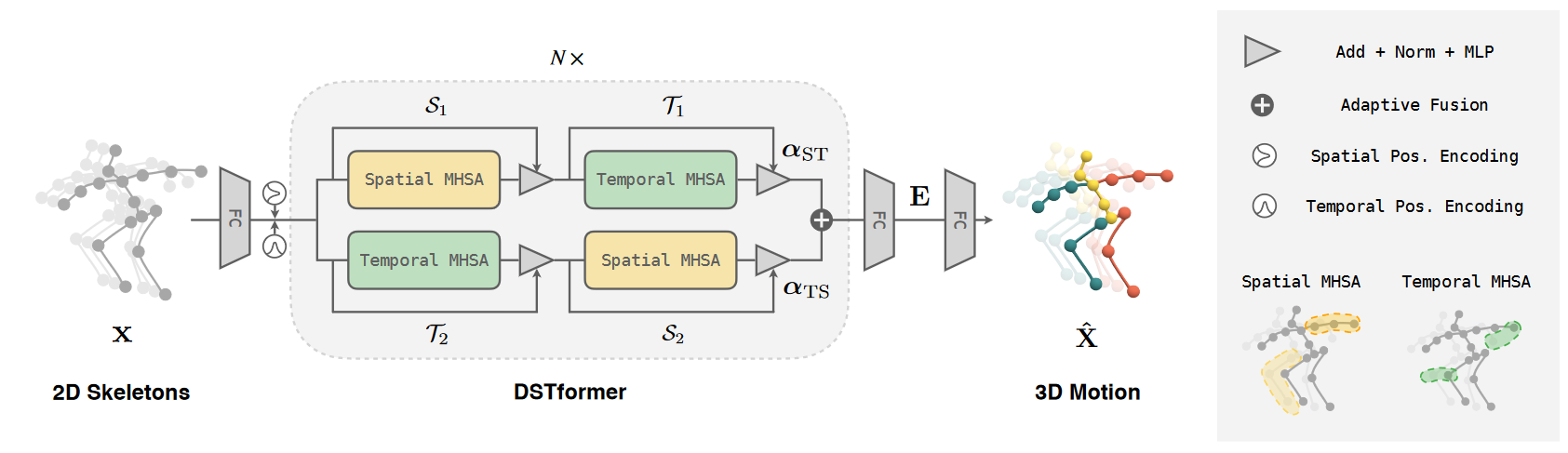

Research on Pre-trained Transformer Models for 3D Pose Estimation

This project implements and evaluates deep learning-based methods for 3D human pose estimation. We reproduced the recently proposed MotionBERT model, trained and tested it on the Human3.6M dataset, and examined its 3D pose reconstruction performance. MotionBERT combines pre-training and fine-tuning to recover 3D human pose from 2D skeleton sequences, which makes it promising for applications such as action recognition, human-computer interaction, and virtual reality.

Finished Works:

- We surveyed mainstream methods in 3D human pose estimation and selected MotionBERT as our experimental model, studying its pre-training + fine-tuning framework and its DSTformer encoder structure in depth.

- We reproduced the MotionBERT method on the Human3.6M dataset, implementing the model’s training and testing pipeline, including data preprocessing, network architecture configuration, and training parameter settings.

- We compared the experimental results of MotionBERT with those of mainstream methods such as VideoPose3D, UGCN, and PoseFormer, using MPJPE (Mean Per Joint Position Error) as the evaluation metric.

- Finally, we visualized and analyzed the experimental results, highlighting MotionBERT’s advantages in terms of pose estimation accuracy and robustness, while also discussing its limitations and potential future optimization directions.
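MPJPE, the metric used in the comparison above, is the mean Euclidean distance between predicted and ground-truth 3D joint positions. A minimal NumPy sketch follows; the `(frames, joints, 3)` layout and the use of joint 0 as the root for the optional root-relative alignment are assumptions about the skeleton format, not details taken from the MotionBERT codebase.

```python
import numpy as np


def mpjpe(pred: np.ndarray, gt: np.ndarray, root_relative: bool = False, root: int = 0) -> float:
    """Mean per-joint position error.

    pred, gt: arrays of shape (frames, joints, 3) in the same units (e.g. millimetres).
    If root_relative is True, both poses are first translated so that joint `root`
    sits at the origin (root index 0 is an assumed skeleton convention).
    """
    if pred.shape != gt.shape:
        raise ValueError("pred and gt must have the same shape")
    if root_relative:
        pred = pred - pred[:, root:root + 1, :]
        gt = gt - gt[:, root:root + 1, :]
    # Euclidean distance per joint, averaged over all joints and frames
    return float(np.mean(np.linalg.norm(pred - gt, axis=-1)))
```

A prediction offset from the ground truth by a constant `(3, 4, 0)` vector has an MPJPE of 5 in absolute coordinates but 0 after root-relative alignment, which is why the alignment convention must match across compared methods.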

Airborne Fire Control Radar Anti-Jamming Performance Simulation System

This project developed a mission-oriented avionics electronic countermeasures (ECM) simulation system to enhance radar anti-jamming capabilities in modern aerial combat. The system provides multi-platform, multi-target radar performance modeling and evaluation, supporting flexible jamming scenario configuration and algorithm extensions. It offers realistic, controllable, and reproducible technical support for air force combat training, equipment demonstration, and countermeasure strategy research. This platform improves radar system evaluation efficiency and battlefield adaptability in highly contested environments, laying the groundwork for systematic design and real-world performance validation of next-generation airborne radar.

Finished Works:

- Developed virtual radar subsystem models covering signal generation, echo simulation, clutter/jamming, and anti-jamming processing across air-to-air, air-to-ground, and air-to-sea modes. Integrated mainstream anti-jamming algorithms (STAP, sidelobe cancellation) and provided real-time signal visualization and effect evaluation.

- Designed an interactive human-machine interface for combat drills and training, enabling human-in-the-loop simulation control, flexible mission configuration, and instructor intervention during training sessions.

- Developed and validated a target tracking and data fusion module, creating configurable aerial target motion models for accurate echo simulation. Integrated and optimized typical tracking algorithms (Kalman filter, JPDA) for robust multi-target tracking. Developed a cooperative data fusion module for multiple radar platforms using Bayesian estimation and neural networks, significantly improving tracking robustness and evaluation capability in complex environments.
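The Kalman filter named above alternates a predict step and an update step. The sketch below is a minimal linear Kalman filter for a single coordinate under a constant-velocity motion model; the class name, time step, and noise covariances are illustrative assumptions rather than the system's actual parameters, and JPDA's probabilistic association across multiple targets is not shown.

```python
import numpy as np


class ConstantVelocityKF:
    """Minimal linear Kalman filter with state [position, velocity] (illustrative parameters)."""

    def __init__(self, dt: float = 1.0, q: float = 1e-3, r: float = 1.0):
        self.F = np.array([[1.0, dt], [0.0, 1.0]])  # constant-velocity state transition
        self.H = np.array([[1.0, 0.0]])  # only position is measured
        self.Q = q * np.eye(2)  # process noise covariance (assumed)
        self.R = np.array([[r]])  # measurement noise covariance (assumed)
        self.x = np.zeros((2, 1))  # state estimate
        self.P = 100.0 * np.eye(2)  # large initial uncertainty

    def predict(self) -> np.ndarray:
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x

    def update(self, z: float) -> np.ndarray:
        y = np.array([[z]]) - self.H @ self.x  # innovation
        S = self.H @ self.P @ self.H.T + self.R  # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P
        return self.x
```

Feeding noise-free positions of a target moving 2 units per step, for example calling `kf.predict()` and then `kf.update(2.0 * k)` for `k = 1..50`, drives the velocity estimate `kf.x[1, 0]` close to 2 even though velocity is never measured directly.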

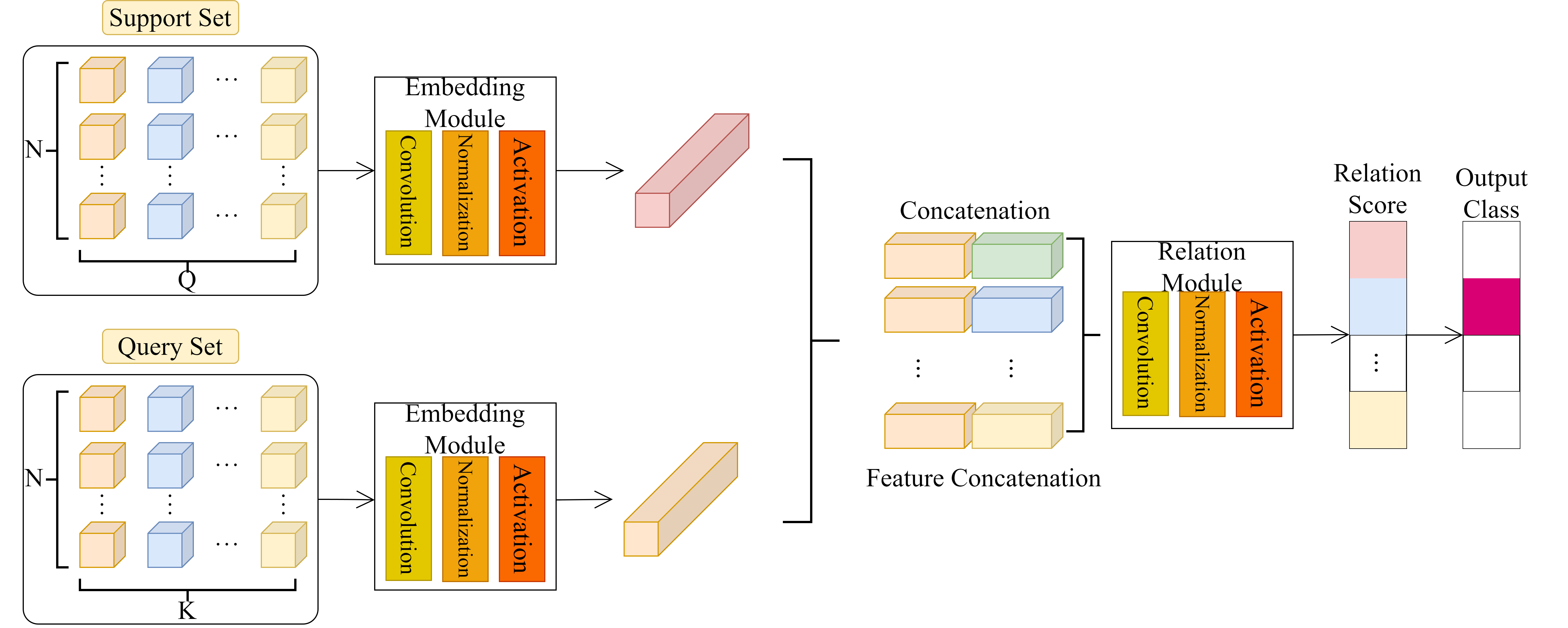

Research on Hyperspectral Image Classification Techniques under Few-Shot Conditions

This project tackles hyperspectral image (HSI) classification with limited samples, aiming to reduce reliance on large labeled datasets. We use a meta-learning approach that enables the model to adapt quickly and generalize across tasks, achieving robust pixel-level classification even with scarce data. Our framework combines 3D spatio-spectral features with relational reasoning to exploit the unique structure of HSI. Experiments on public datasets show superior accuracy with few labeled samples, demonstrating strong robustness and transferability. This research provides a practical solution for few-shot HSI interpretation and lays the foundation for generalizable, lightweight, and data-efficient learning in remote sensing applications.

Finished Works:

- We designed an end-to-end Few-Shot Learning classification model based on meta-learning principles. This significantly enhances the model’s generalization capability in scenarios with extremely limited samples.

- We used 3D convolutional neural networks (3D-CNNs) to jointly extract spatial and spectral features from hyperspectral images, capturing the dependencies between the spatial and spectral dimensions of the data.

- We incorporated a deep Relation Network that uses 2D convolutional modules to compute relational scores between samples. This enables pixel-wise class prediction, boosting discriminative performance under few-shot conditions.
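Meta-learning models of this kind are typically trained on episodes: each episode draws N classes, K labeled support pixels per class, and a set of query pixels to classify. The sketch below shows only this episode construction step; `sample_episode` is a hypothetical helper, the N/K/query sizes are illustrative, and 3D patch extraction, the 3D-CNN embedding, and Relation Network scoring are all omitted.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def sample_episode(
    labels: Sequence[int], n_way: int, k_shot: int, n_query: int, rng: random.Random
) -> Tuple[List[Tuple[int, int]], List[Tuple[int, int]]]:
    """Sample one N-way K-shot episode from per-pixel class labels.

    Returns (support, query) as lists of (pixel_index, episode_label) pairs,
    where episode labels are remapped to 0..n_way-1 for this episode only.
    """
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, c in enumerate(labels):
        by_class[c].append(idx)
    # Only classes with enough pixels for both support and query can be drawn
    eligible = sorted(c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query)
    classes = rng.sample(eligible, n_way)
    support: List[Tuple[int, int]] = []
    query: List[Tuple[int, int]] = []
    for ep_label, c in enumerate(classes):
        picked = rng.sample(by_class[c], k_shot + n_query)  # disjoint support/query pixels
        support += [(i, ep_label) for i in picked[:k_shot]]
        query += [(i, ep_label) for i in picked[k_shot:]]
    return support, query
```

Remapping labels per episode forces the model to learn how to compare samples rather than memorize fixed class identities, which is what lets it adapt to new classes from only K examples.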

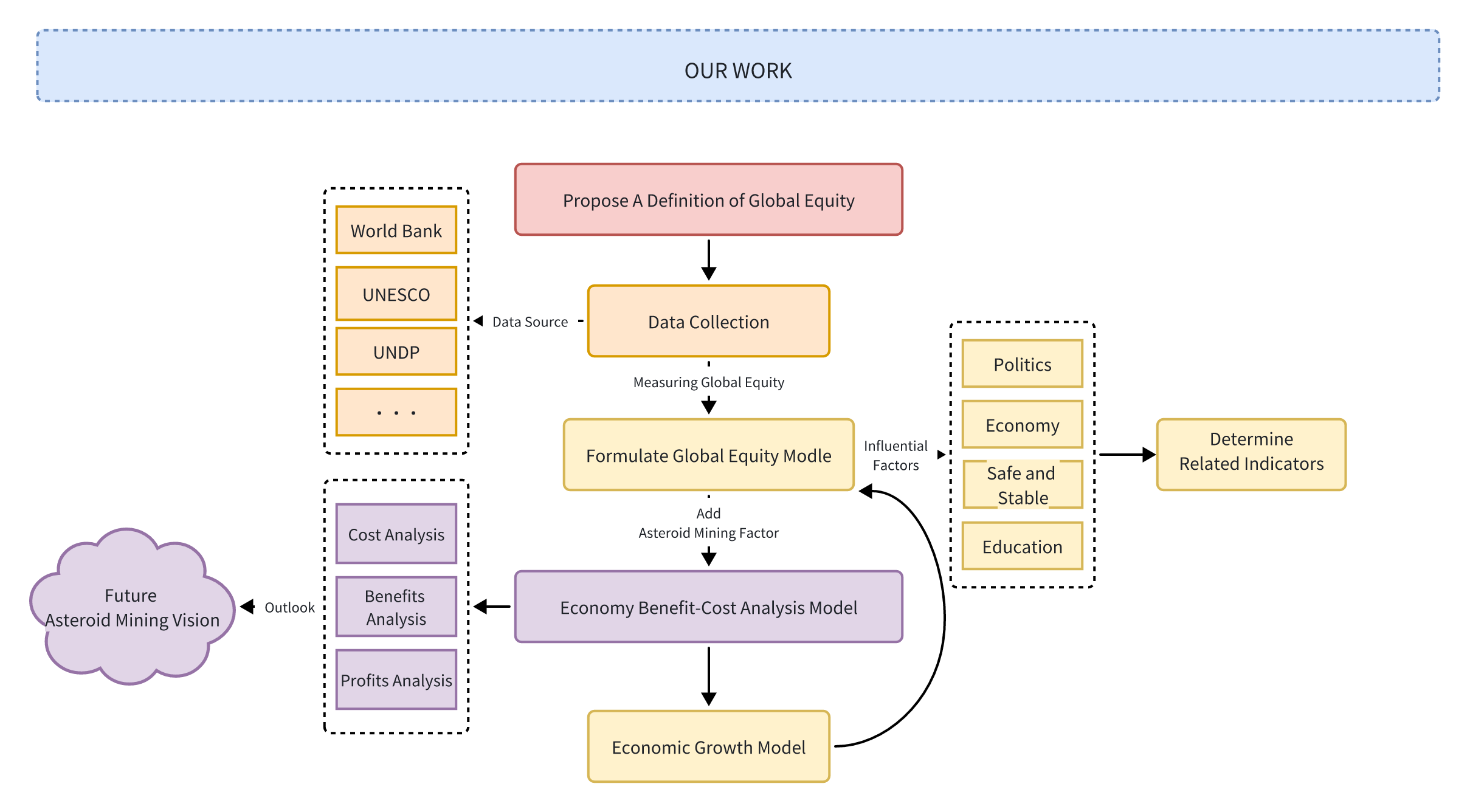

2022 Mathematical Contest in Modeling (MCM)

In the 2022 MCM, our team built a comprehensive and quantifiable model for “Global Equity Assessment,” measuring national equity across resource access, economic development, and environmental responsibility. We also explored how external factors, such as future asteroid mining, might affect this global balance. Drawing on systems thinking and a range of mathematical techniques, we developed an interpretable quantitative framework covering indicator design, data clustering, and causal modeling. The project combined interdisciplinary modeling with real-world policy and governance questions, providing a basis for quantifying international equity and informing future global resource allocation strategies.

Finished Works:

- Multi-dimensional Assessment with AHP: We designed a multi-dimensional global equity assessment system using the Analytic Hierarchy Process (AHP). This resulted in the Global Equity Index (GIEI), providing a comprehensive score for each country.

- National Classification via K-means++: We applied the K-means++ clustering algorithm to categorize countries. This helped us uncover distribution features and cluster patterns across different equity levels.

- Coupling Economic Growth with Resource Development: Our model integrates resource development factors, like mining revenue, into an economic growth framework. This allowed us to build a coupling model of equity and economic interests.

- Sensitivity Analysis for Robustness: We conducted sensitivity tests on key weights and parameters within the model. This evaluated how variable changes impact the GIEI and classification results, thereby enhancing the model’s robustness and interpretability.
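The AHP step above derives indicator weights from a pairwise-comparison matrix. A common way to do this is Saaty's principal-eigenvector method with a consistency check, sketched below in NumPy. The team's actual indicators and comparison judgments are not given here, so the matrix in the example is purely illustrative; `RANDOM_INDEX` holds Saaty's random consistency index for matrices of size 1 to 10, and a consistency ratio below about 0.1 is conventionally treated as acceptable.

```python
import numpy as np

# Saaty's random consistency index (RI) for matrix sizes n = 1..10
RANDOM_INDEX = [0.0, 0.0, 0.58, 0.90, 1.12, 1.24, 1.32, 1.41, 1.45, 1.49]


def ahp_weights(pairwise: np.ndarray):
    """Return (weights, consistency_ratio) for a reciprocal pairwise-comparison matrix (n <= 10)."""
    n = pairwise.shape[0]
    eigvals, eigvecs = np.linalg.eig(pairwise)
    k = int(np.argmax(eigvals.real))  # index of the principal eigenvalue
    w = np.abs(eigvecs[:, k].real)
    w = w / w.sum()  # normalize weights to sum to 1
    lambda_max = eigvals[k].real
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0  # consistency index
    ri = RANDOM_INDEX[n - 1]
    cr = ci / ri if ri > 0 else 0.0  # consistency ratio
    return w, cr
```

For a perfectly consistent matrix built as `A[i, j] = w[i] / w[j]`, the principal eigenvalue equals `n`, the original weights are recovered exactly, and the consistency ratio is zero; real expert judgments yield a positive ratio that signals how much the comparisons contradict each other.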

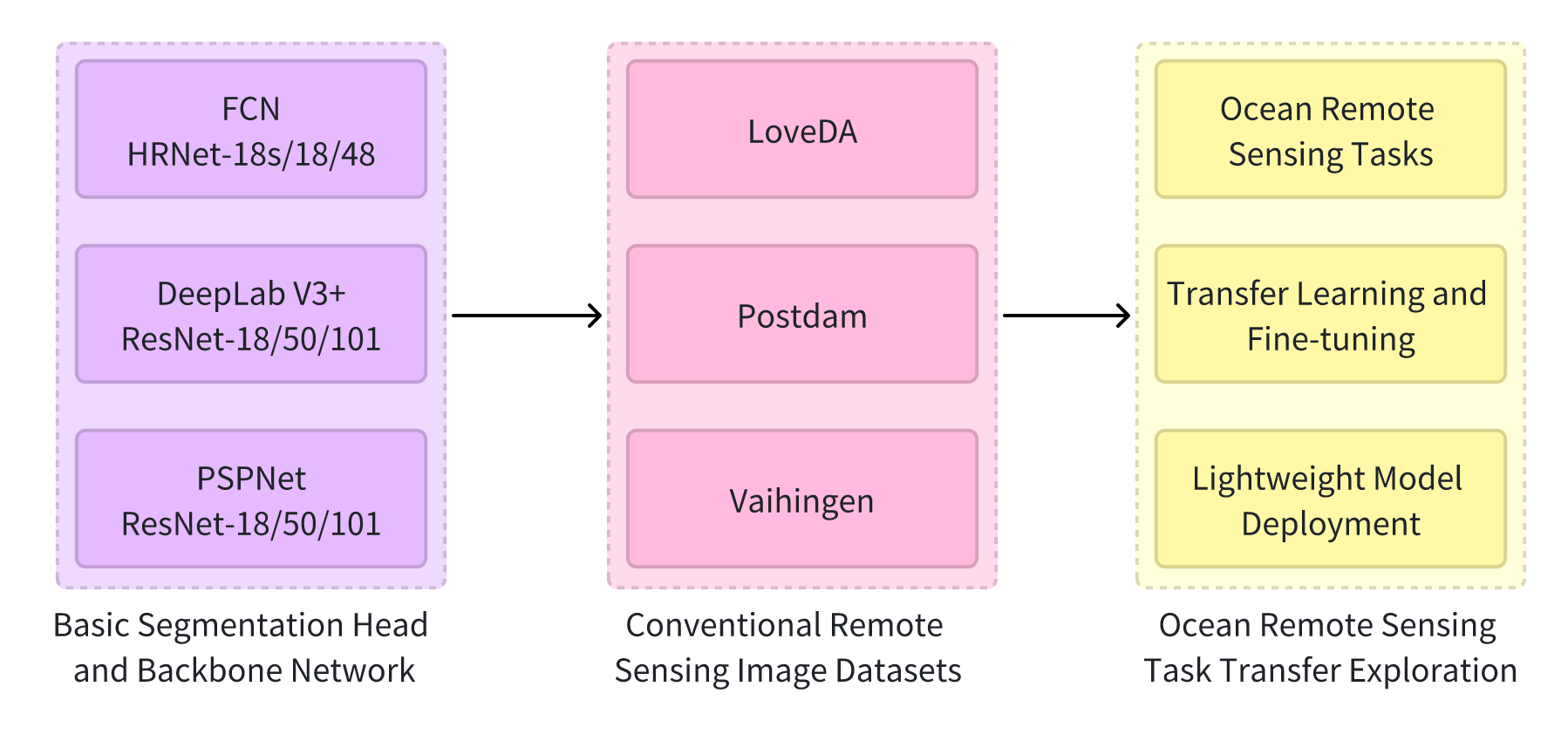

Systematic Study of Deep Segmentation Architectures for Remote Sensing with Insights into Maritime Transferability

This project focuses on semantic segmentation of remote sensing images, systematically evaluating the adaptability and performance of current deep learning segmentation methods across various remote sensing scenarios. Our goal is to provide theoretical support and methodological guidance for future model transfer and structural fine-tuning in marine remote sensing image segmentation. We specifically investigated the models’ capabilities in preserving spatial structure, refining boundaries, and modeling inter-class differences. We also explored their transferability in practical marine applications, especially concerning few-shot learning and inter-class confusion challenges.

Finished Works:

- Systematic Evaluation of Mainstream Architectures: We conducted a comprehensive evaluation of three representative semantic segmentation architectures: FCN (with HRNet backbone), DeepLabV3+ (with ResNet backbone), and PSPNet (with ResNet backbone). Our experiments spanned three typical remote sensing datasets (LoveDA, Potsdam, and Vaihingen), involving 27 model training and evaluation tasks to thoroughly compare their performance in remote sensing scenarios.

- Analysis of Architectural Adaptability: We systematically analyzed the adaptability differences of these semantic segmentation architectures in remote sensing images. This revealed their performance limitations in complex backgrounds and multi-scale object scenarios, providing a reliable benchmark assessment and model selection reference for few-shot semantic segmentation tasks in marine remote sensing.

- Preliminary Framework for Cross-Domain Transfer: We established a preliminary framework for model structure analysis and adaptability specifically designed for transferring from general remote sensing to marine remote sensing tasks. This offers a systematic theoretical foundation and practical support for future work in key areas such as transfer learning, structural fine-tuning, and lightweight deployment.

🏆 Honors and Awards

🏅 Honors

- 2024.09, Northwestern Polytechnical University Master’s Second-Class Scholarship

- 2023.09, Northwestern Polytechnical University Master’s First-Class Scholarship

- 2022.09, Ocean University of China Comprehensive Third-Class Scholarship

- 2021.09, Ocean University of China Social Practice Scholarship

🎏 Competitions

- 2022.05, Mathematical Contest in Modeling (MCM), Meritorious Winner

- 2020.12, Shandong Province College Student Physics Competition, Third Prize

💼 Societies

- 2025.08, 2025 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2025), Brisbane, Australia, 3-8 August 2025

- 2024.12, Reviewer for IEEE Transactions on Geoscience and Remote Sensing

- 2024.11, The 5th China International SAR Symposium (CISS 2024), Xi’an, China

- 2024.05, Chinese Congress on Image and Graphics (CCIG 2024), Xi’an, China